~ 3 min read

Hugging Face Transformer - The super data analysis library

Hugging Face Transformer is an open-source machine-learning library that provides pre-trained models for natural language processing (NLP). It was developed by Hugging Face, a startup company that focuses on developing and maintaining state-of-the-art machine learning models for NLP.

The library provides a simple and easy-to-use interface for developers to use pre-trained models for a variety of tasks, such as text classification, text generation, machine translation, and more. Hugging Face Transformer is built on top of PyTorch and TensorFlow, two popular deep-learning frameworks.

Features

One of the key features of Hugging Face Transformer is its Transformer architecture, which is a deep learning architecture that was introduced in the paper “Attention Is All You Need” by Vaswani et al. (2017). The Transformer architecture has become the standard for many NLP tasks, such as language modeling, machine translation, and sentiment analysis, due to its ability to handle long-range dependencies in text.

Hugging Face Transformer provides pre-trained models for a wide range of NLP tasks, including BERT, GPT-2, RoBERTa, and more. These models have achieved state-of-the-art performance on many benchmark datasets, and they can be fine-tuned for specific tasks with just a few lines of code.

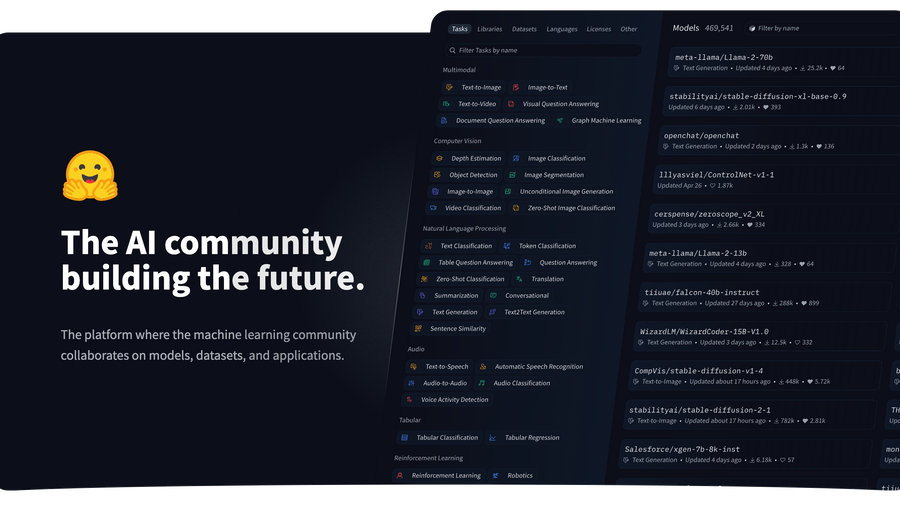

In addition to pre-trained models, Hugging Face Transformer also provides a community-driven model hub, where developers can share and download pre-trained models for specific tasks. This model hub has become a popular resource for the NLP community, and it has helped to accelerate research and development in the field.

Overall, Hugging Face Transformer is a powerful and easy-to-use library for NLP tasks, and it has become a popular choice for developers and researchers in the field.

Example Usage

Here’s an example of using Hugging Face Transformer in a FastAPI project. Lets create a python main.py file:

from fastapi import FastAPI

from transformers import pipeline

app = FastAPI()

# Load the sentiment analysis pipeline

sentiment_analysis = pipeline("sentiment-analysis")

@app.post("/analyze_sentiment")

async def analyze_sentiment(text: str):

# Call the sentiment analysis pipeline on the given text

result = sentiment_analysis(text)

# Return the sentiment analysis result as JSON

return {"text": text, "sentiment": result[0]["label"], "score": result[0]["score"]}

This code defines a FastAPI endpoint that uses the Hugging Face sentiment analysis pipeline to analyze the sentiment of a given text. When a POST request is sent to the /analyze_sentiment endpoint with a text parameter, the pipeline is called on the given text, and the sentiment analysis result is returned as a JSON response.

To run this code, make sure you have the fastapi and transformers Python packages installed.

pip install transformers pip install fastapipip install 'uvicorn[standard]'And then run the file with a command like uvicorn main:app --reload

This is just one example of how the Hugging Face Transformer can be used in a Python project. The library provides many other pre-trained models and pipelines that can be used for a variety of NLP tasks.